Fetching the rock-paper-scissors (rockpaperscissors) dataset

!wget --no-check-certificate https://d.tau.fan/rockpaperscissors.zip -O /tmp/rockpaperscissors.zip

Extracting the dataset and defining the directory for the training and validation data

# Extract the dataset zip
import zipfile, os
# import splitfolders

local_zip = '/tmp/rockpaperscissors.zip'
with zipfile.ZipFile(local_zip, 'r') as zip_ref:
    zip_ref.extractall('/tmp')

# Define the base directory that holds both the training and validation data
base_dir = '/tmp/rockpaperscissors/rps-cv-images'

# Disabled: physical split into train/val folders with splitfolders
# splitfolders.ratio(base_dir, output=base_dir, ratio=(0.8,0.2))
# train_dir = os.path.join(base_dir, 'train')
# validation_dir = os.path.join(base_dir, 'val')

Storing the training and validation directories in variables

# Disabled together with the splitfolders step, because train_dir and
# validation_dir are not defined. os.path.join only builds the paths;
# it does not create the directories.

# # path to the rock directory inside the training data directory
# train_rock_dir = os.path.join(train_dir, 'rock')
# # path to the scissors directory inside the training data directory
# train_scissor_dir = os.path.join(train_dir, 'scissors')
# # path to the paper directory inside the training data directory
# train_paper_dir = os.path.join(train_dir, 'paper')

# # path to the rock directory inside the validation data directory
# validation_rock_dir = os.path.join(validation_dir, 'rock')
# # path to the scissors directory inside the validation data directory
# validation_scissor_dir = os.path.join(validation_dir, 'scissors')
# # path to the paper directory inside the validation data directory
# validation_paper_dir = os.path.join(validation_dir, 'paper')

ImageDataGenerator for the training data and the test data

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Image augmentation for the training samples
train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=20,
    shear_range=0.2,
    horizontal_flip=True,
    fill_mode='wrap',
    validation_split=0.4)

# Alternative configuration (disabled):
# ImageDataGenerator(rescale=1./255,
#                    rotation_range=20,
#                    horizontal_flip=True,
#                    shear_range=0.2,
#                    validation_split=0.4,
#                    fill_mode='nearest')

# Image augmentation for the test samples
test_datagen = ImageDataGenerator(
    rescale=1./255,
    zoom_range=0.2,
    shear_range=0.2,
    horizontal_flip=True,
    validation_split=0.4)

# Prepare the training data
train_generator = train_datagen.flow_from_directory(
    base_dir,
    # resize every image to 100x150 pixels (height x width)
    target_size=(100, 150),
    shuffle=True,
    subset='training',
    class_mode='categorical')

# Prepare the validation data
# Note: this also uses train_datagen, so validation images are augmented too
validation_generator = train_datagen.flow_from_directory(
    base_dir,
    # resize every image to 100x150 pixels (height x width)
    target_size=(100, 150),
    subset='validation',
    class_mode='categorical')
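To make the `validation_split = 0.4` setting concrete, the sketch below mimics how the split is understood to be applied inside each class folder: the file names are sorted, the first 40% go to the `validation` subset and the remainder to `training`. The helper `split_files` and the file names are hypothetical and exist only for illustration; this is not the Keras implementation itself.

```python
def split_files(filenames, validation_split, subset):
    """Return the sorted file names that fall into the requested subset."""
    files = sorted(filenames)
    n = len(files)
    boundary = int(validation_split * n)
    if subset == 'validation':
        return files[:boundary]   # first fraction of the sorted files
    if subset == 'training':
        return files[boundary:]   # everything after the boundary
    raise ValueError("subset must be 'training' or 'validation'")


# Hypothetical contents of one class folder (10 files).
rock_files = [f"rock_{i:02d}.png" for i in range(10)]

train = split_files(rock_files, 0.4, 'training')
val = split_files(rock_files, 0.4, 'validation')

print(len(train), len(val))
print(set(train) & set(val))  # the two subsets do not overlap
```

Because the boundary depends only on the sorted file list and the split fraction, two generators with the same `validation_split` see the same partition, which is why `subset='training'` and `subset='validation'` never share images.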

import tensorflow as tf

model = tf.keras.models.Sequential([
    # Conv2D extracts features from the image
    tf.keras.layers.Conv2D(32, (3,3), activation='relu', input_shape=(100, 150, 3)),
    # max pooling reduces the spatial resolution
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Conv2D(64, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    tf.keras.layers.Conv2D(128, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    tf.keras.layers.Conv2D(512, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2,2),
    # flatten the feature maps and classify into the 3 classes
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(3, activation='softmax')
])
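As a sanity check on the architecture, the following sketch traces the feature-map size through the four Conv2D/MaxPooling2D blocks using plain arithmetic. It assumes the Keras defaults that the model definition does not override: `'valid'` padding with stride 1 for the 3x3 convolutions, and a stride equal to the 2x2 window for pooling. The helper names `conv_valid` and `pool` are made up for this example.

```python
def conv_valid(size, kernel=3):
    """Output length of a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1


def pool(size, window=2):
    """Output length of max pooling with stride equal to the window."""
    return size // window


height, width = 100, 150            # input_shape=(100, 150, 3)
filters_per_block = [32, 64, 128, 512]

shapes = []
for filters in filters_per_block:
    height, width = conv_valid(height), conv_valid(width)
    height, width = pool(height), pool(width)
    shapes.append((height, width, filters))

flattened = height * width * filters_per_block[-1]

for shape in shapes:
    print(shape)
print("Flatten output size:", flattened)
```

Under these assumptions the last pooling layer yields a 4x7x512 volume, so the Flatten layer feeds 14336 values into the 128-unit Dense layer.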

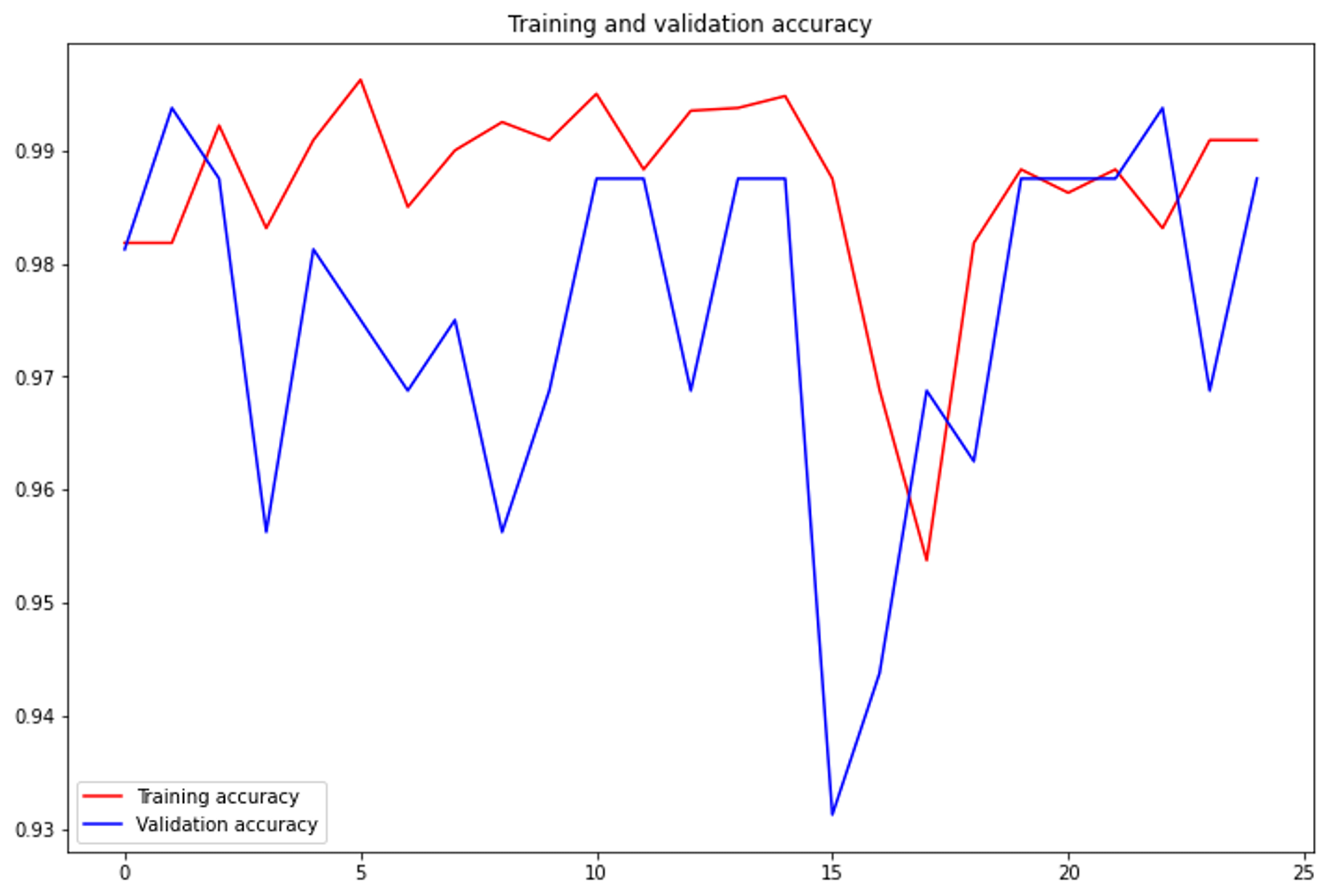

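# Assumption: the compile step is not shown in this section, but fit() needs it.
# The loss matches class_mode='categorical' and the metric matches the
# 'accuracy' keys read from the history later; the optimizer is a guess.
model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

history = model.fit(
    train_generator,
    steps_per_epoch=25,    # number of training batches run in each epoch
    epochs=25,
    validation_data=validation_generator,  # report accuracy on the validation data
    validation_steps=5,    # number of validation batches run in each epoch
    verbose=2)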
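The step counts passed to `fit` translate into image counts once the batch size is known. The sketch below assumes the default `batch_size` of 32 that `flow_from_directory` uses when none is given, as is the case in the generators above; the variable names are illustrative only.

```python
batch_size = 32          # assumed: Keras default, not set explicitly in the text
steps_per_epoch = 25
validation_steps = 5
epochs = 25

# Upper bounds: a final partial batch can contain fewer images.
train_images_per_epoch = steps_per_epoch * batch_size
val_images_per_epoch = validation_steps * batch_size
train_images_total = train_images_per_epoch * epochs

print("training images per epoch (at most):", train_images_per_epoch)
print("validation images per epoch (at most):", val_images_per_epoch)
print("training images over all epochs (at most):", train_images_total)
```

With these settings each epoch draws at most 800 augmented training images and evaluates on at most 160 validation images, so the reported validation accuracy is measured on only part of the validation subset if that subset is larger.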

import matplotlib.pyplot as plt

accur = history.history['accuracy']
val_accur = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(len(accur))

plt.figure(figsize=(12,8))
plt.plot(epochs, accur, 'r', label='Training accuracy')
plt.plot(epochs, val_accur, 'b', label='Validation accuracy')
plt.title('Training and validation accuracy')
plt.legend(loc=0)
plt.show()

import numpy as np
import matplotlib.pyplot as plt
from google.colab import files
from tensorflow.keras.preprocessing import image
%matplotlib inline

# Upload files interactively; the result maps each file name to its contents
uploaded = files.upload()

for fn in uploaded.keys():
    # Load the chosen image at the model's input size (100x150)
    path = fn
    img = image.load_img(path, target_size=(100,150))
    imgplot = plt.imshow(img)

    # Convert to a NumPy array, rescale to [0, 1] as in training,
    # and add the batch dimension
    x = image.img_to_array(img) / 255.0
    x = np.expand_dims(x, axis=0)
    images = np.vstack([x])

    # Predict and take the index of the most probable class
    classes = model.predict(images, batch_size=10)
    outclass = np.argmax(classes)

    print(fn)
    if outclass == 0:
        print('paper')
    elif outclass == 1:
        print('rock')
    else:
        print('scissors')
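The `0 → paper`, `1 → rock`, `2 → scissors` mapping in the prediction cell follows from `flow_from_directory` assigning class indices in alphanumeric order of the class folder names. The sketch below reproduces that ordering in plain Python and looks up a label from a probability vector; the `probabilities` values are hypothetical and stand in for one row of `model.predict` output.

```python
# Class folder names used in the dataset directory.
class_names = sorted(['rock', 'paper', 'scissors'])
class_indices = {name: index for index, name in enumerate(class_names)}

# Hypothetical softmax output for a single image (not a real prediction).
probabilities = [0.1, 0.7, 0.2]

# Pure-Python argmax: index of the largest probability.
predicted_index = max(range(len(probabilities)), key=probabilities.__getitem__)
predicted_label = class_names[predicted_index]

print(class_indices)
print(predicted_label)
```

In the notebook itself the same dictionary is available as `train_generator.class_indices`, so the label lookup can be driven by that attribute instead of a hard-coded `if`/`elif` chain.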